| Authors: | Danny E. P. Vanpoucke |

| Journal: | Computational Materials Science 181, 109736 (2020) |

| doi: | 10.1016/j.commatsci.2020.109736 |

| IF(2019): | 2.863 |

| export: | bibtex |

| pdf: | <ComputMaterSci> (Open Access) |

| github: | <Hive-toolbox> |


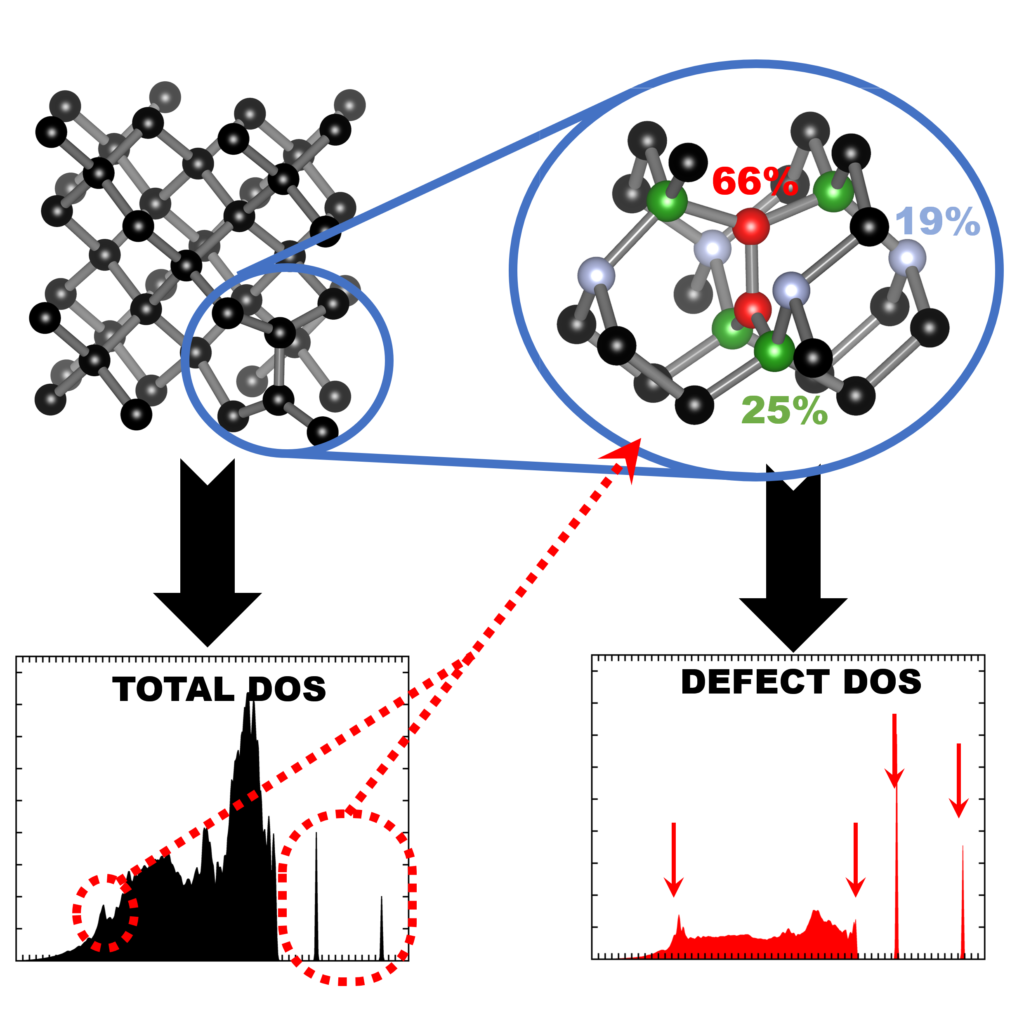

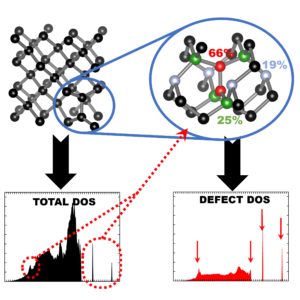

| Graphical Abstract: Fingerprinting defects in diamond through the creation of the vibrational spectrum of a defect. |

Abstract

Vibrational spectroscopy techniques are some of the most-used tools for materials characterization. Their simulation is therefore of significant interest, but it is commonly performed using low-cost approximate computational methods, such as force fields. Highly accurate quantum-mechanical methods, on the other hand, are generally only used in the context of molecules or small-unit-cell solids. For extended solid systems, such as defects, the computational cost of plane-wave-based quantum-mechanical simulations remains prohibitive for routine calculations. In this work, we present a computational scheme for isolating the vibrational spectrum of a defect in a solid. By quantifying the defect character of the atom-projected vibrational spectra, the contributing atoms are identified and the strength of their contribution determined. This method could be used to systematically improve phonon fragment calculations. More interestingly, using the atom-projected vibrational spectra of the defect atoms directly, it is possible to obtain a well-converged defect spectrum at lower computational cost, which also incorporates the host-lattice interactions. Using diamond as the host material, four point-defect test cases, each presenting a distinctly different vibrational behaviour, are considered: a heavy substitutional dopant (Eu), two intrinsic point defects (neutral vacancy and split interstitial), and the negatively charged N-vacancy center. The heavy dopant and split interstitial present localized modes at low and high frequencies, respectively, showing little overlap with the host spectrum. In contrast, the neutral vacancy and the N-vacancy center show a broad contribution to the upper spectral range of the host spectrum, making them challenging to extract. Independent of the vibrational behaviour, the main atoms contributing to the defect spectrum can be clearly identified. Recombination of their atom-projected spectra results in the isolated spectrum of the point defect.
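To make the idea of atom-projected spectra and their recombination more concrete, the following is a minimal Python sketch, not the implementation used in the paper. It assumes the phonon frequencies and normalized eigenvectors of the defect supercell are already available. All function names, the Gaussian broadening, the `threshold` value, and in particular the overlap-based `defect_character` measure are illustrative assumptions; the paper's own definition of defect character may differ.

```python
import numpy as np


def atom_projected_spectra(freqs, eigvecs, grid, sigma=5.0):
    """Gaussian-broadened vibrational spectrum projected on each atom.

    freqs   : (n_modes,) mode frequencies
    eigvecs : (n_modes, n_atoms, 3) eigenvectors, each mode normalized to 1
    grid    : (n_grid,) uniform frequency grid
    Returns an array of shape (n_atoms, n_grid).
    """
    # Weight of every atom in every mode: squared eigenvector amplitude.
    weights = np.sum(np.abs(eigvecs) ** 2, axis=2)          # (n_modes, n_atoms)
    # One normalized Gaussian per mode, centered at the mode frequency.
    gauss = np.exp(-0.5 * ((grid[None, :] - freqs[:, None]) / sigma) ** 2)
    gauss /= sigma * np.sqrt(2.0 * np.pi)                    # (n_modes, n_grid)
    return weights.T @ gauss


def defect_character(spectrum, host_spectrum, grid):
    """ASSUMED measure: 1 minus the overlap of the area-normalized spectra.

    0 means the atom looks like the host, 1 means no overlap with the host.
    """
    dx = grid[1] - grid[0]
    a = spectrum / (spectrum.sum() * dx)
    b = host_spectrum / (host_spectrum.sum() * dx)
    return 1.0 - np.minimum(a, b).sum() * dx


def defect_spectrum(spectra, host_spectrum, grid, threshold=0.2):
    """Sum the projected spectra of atoms whose (assumed) defect character
    exceeds a hypothetical threshold. Returns (atom indices, summed spectrum)."""
    chars = np.array([defect_character(s, host_spectrum, grid) for s in spectra])
    selected = np.flatnonzero(chars > threshold)
    return selected, spectra[selected].sum(axis=0)
```

In this sketch the host reference would typically be the projected spectrum of an atom in a pristine supercell, so atoms far from the defect score close to zero and drop out of the recombined spectrum.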

