Black arts of computational materials science.

Just over half a year ago, I mentioned that I presented two computational materials science projects to the third-year bachelor physics students at the UHasselt. Both projects ended up being chosen by a bachelor student, so I had the pleasure of guiding two eager young minds in their first steps into the world of computational materials science. They worked very hard, cursed their machine or code (as any good computational scientist should do once in a while, just to make sure that he/she is still at the forefront of science) and survived. They actually did quite a bit more than “just surviving”: they grew as scientists and they grew in self-confidence… Given time, I believe they may even thrive within this field of research.

One week ago, they presented their results in a final presentation for their classmates and supervisors. Giel's self-confidence and the clarity of his story were impressive. Giel has a knack for storytelling (a true Pan Narrans, as Terry Pratchett would praise him). His report included an introduction to various topics of solid state physics and computational materials science in which you never notice how complicated the topic actually is. He just takes you along for the ride, and the story unfolds in a very natural fashion. This shows how well he understands what he is writing about.

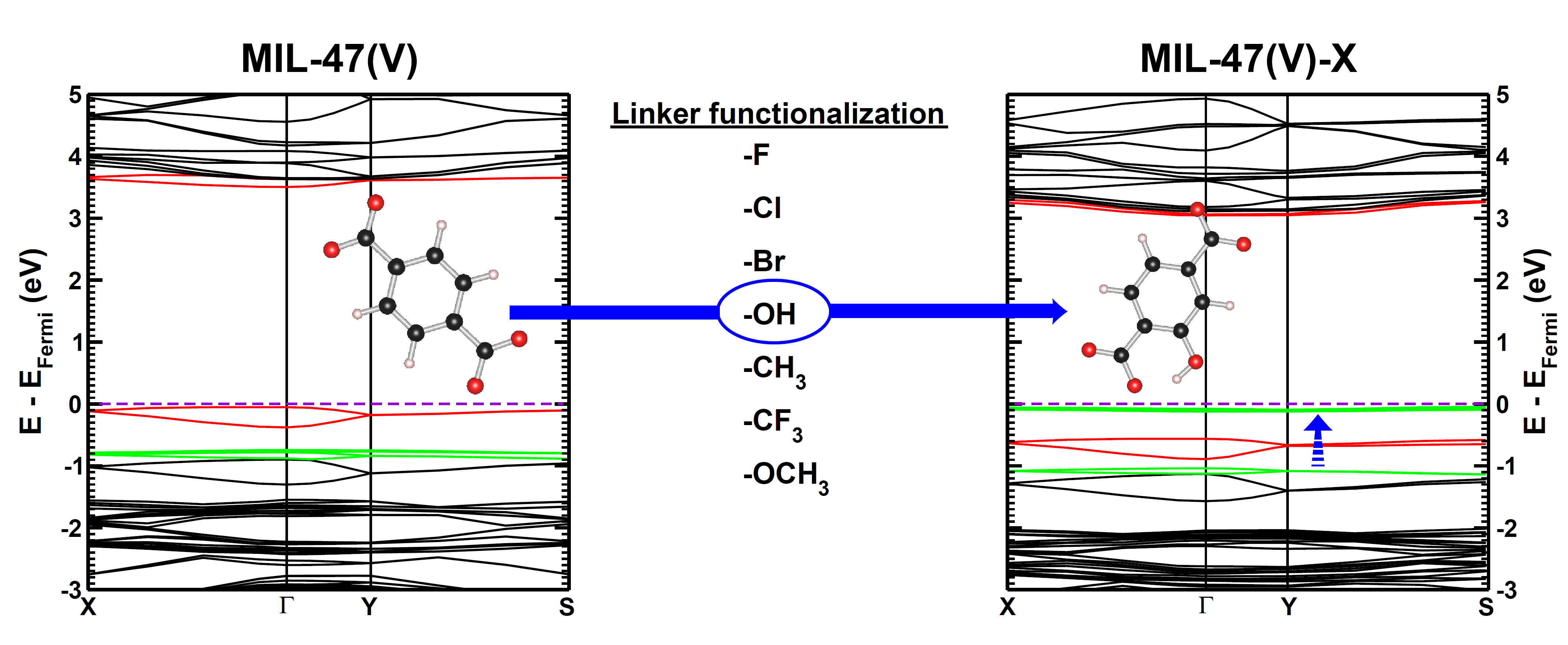

This in no way means his project was simple or easy. Quite soon after starting his project, Giel actually ran into a previously unknown VASP bug. He had to play with spin configurations of defects and of course bumped into a handful of rookie mistakes, each of which he only made once *thumbs-up*. (I could have warned him about them, but I believe people learn more if they bump their heads themselves. This project provided the perfect opportunity to do so in a safe environment. 😎 ) His final report was impressive and his results on the Ge-defect in diamond are of very good quality.

The second project was brought to a successful completion by Asja. This very eager student actually had to learn how to program in Fortran before he could even start. He had to implement code to calculate partial phonon densities with the existing HIVE code. Along the way he also discovered some minor bugs (Thank you very much 🙂 ) and crashed into a rather unexpected hard one near the end of the project. For some time, things looked very bleak indeed: the partial densities of equivalent atoms differed from one another, and the partial densities did not add up to the total density. As a result, doubts arose as to whether the goal of the project could be reached at all. Luckily, Asja never gave up and stayed positive, and after half a day of debugging on my part the culprit was found (in my part of the code as well). With this fixed, he quickly started torturing his own laptop calculating partial phonon densities of states for metal-organic frameworks and, later on, also for the Ge-defect in diamond, with data provided by Giel. These results are also very promising; they will require some further digging, but they will definitely be very interesting.
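The two symptoms above also make a handy sanity check for any partial phonon density of states. What follows is only a minimal sketch in Python, not the HIVE implementation (which is written in Fortran): the function name `check_partial_dos`, the data layout and the toy numbers are all made up for illustration, and it assumes that the partial densities are given per atom on the same frequency grid as the total density.

```python
def check_partial_dos(total, partial, equivalent_groups, tol=1e-8):
    """Return a list of problems found; an empty list means both checks pass.

    total             -- total density of states, one value per frequency point
    partial           -- dict: atom label -> partial density on the same grid
    equivalent_groups -- lists of atom labels that are equivalent by symmetry
    (Hypothetical layout, chosen for this illustration only.)
    """
    problems = []

    # Check 1: at every frequency point the partial densities
    # should add up to the total density.
    for i, t in enumerate(total):
        s = sum(p[i] for p in partial.values())
        if abs(s - t) > tol:
            problems.append(f"sum mismatch at point {i}: {s} vs {t}")

    # Check 2: symmetry-equivalent atoms should have identical partial densities.
    for group in equivalent_groups:
        reference = partial[group[0]]
        for atom in group[1:]:
            if any(abs(a - b) > tol for a, b in zip(reference, partial[atom])):
                problems.append(f"atoms {group[0]} and {atom} differ")

    return problems


# Toy data (invented numbers): two equivalent C atoms and one Ge atom.
total = [0.0, 1.0, 2.0, 1.0]
partial = {
    "C1": [0.0, 0.25, 0.5, 0.25],
    "C2": [0.0, 0.25, 0.5, 0.25],
    "Ge": [0.0, 0.5, 1.0, 0.5],
}
groups = [["C1", "C2"]]

problems = check_partial_dos(total, partial, groups)
```

With the toy data both checks pass and `problems` is empty; changing a single value of `"C2"` would trigger both kinds of message, which is roughly the picture we were staring at before the bug was found.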

For me, it has been an interesting experience, and I count myself lucky to have had these two brave and very committed students. I wish them all the best of luck for the future, and maybe we will meet again.