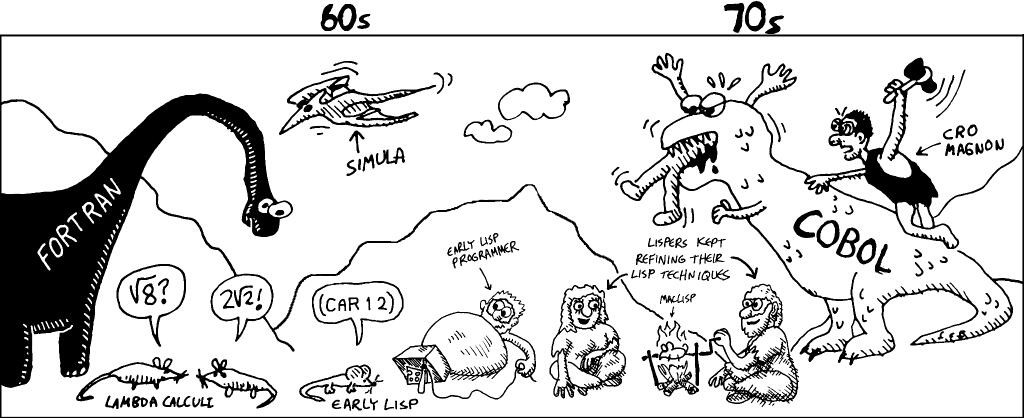

Fortran, just like COBOL (not to be confused with a kobold), is a programming language that is best known for its predicted demise. It has been around for over half a century, making it one of the oldest high-level programming languages still in use. Because of its age, many perceive it as a language that should have gone the way of the dinosaur a long time ago. Yet it persists, despite repeated attempts to retire it. One of the main concerns is that the language is not up to date, while there are so many nice high-level languages that let you do things much more easily and “as fast”. Before we look into that, it is important to know that there are roughly two types of programming languages:

- Compiled languages (e.g. Fortran, C/C++, Pascal)

- Interpreted and scripting languages (e.g. Java, PHP, Python, Perl)

The former languages result in binary code that is executed directly on the machine of the user. These programs are, generally speaking, fast and efficient. Their drawback, however, is that the binaries are not very transferable (different hardware, e.g. 32 vs. 64 bit, tends to be a problem). In contrast, interpreted languages are ‘compiled/interpreted’ at run-time by additional software installed on the machine of the user (e.g. the JVM, the Java virtual machine), which tends to make these programs slower and less efficient, since this translation work happens while the program runs. Their advantage, however, is their transferability and ease of use. Note that Java is a bit of a borderline case: it is not compiled to native machine code ahead of time like C and Fortran, since it requires a JVM to run, but it is also not a pure scripting language like Python or bash, where the language is mainly used to glue other programs together.
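To make the ‘compiled/interpreted at run-time’ route a little more concrete, here is a minimal Python sketch (Python being one of the scripting languages listed above). It uses the built-in `compile` function and the standard `dis` module to show that the source text is first translated into bytecode, which the interpreter then executes. The one-line program and the helper names `to_bytecode` and `run` are made up for illustration.

```python
import dis

# Hypothetical one-line "program", used only for illustration.
SOURCE = "area = 3.1415 * radius ** 2"


def to_bytecode(source):
    """Translate source text into a code object holding interpreter bytecode."""
    return compile(source, "<example>", "exec")


def run(source, radius):
    """Execute the compiled code in a fresh namespace and return 'area'."""
    namespace = {"radius": radius}
    exec(to_bytecode(source), namespace)
    return namespace["area"]


if __name__ == "__main__":
    code = to_bytecode(SOURCE)
    # The raw bytecode is a bytes object. It targets the Python virtual
    # machine, not the CPU, which is why an interpreter must be installed.
    print(type(code.co_code).__name__, len(code.co_code), "bytes of bytecode")
    dis.dis(code)  # human-readable listing; details vary by Python version
    print(run(SOURCE, 2.0))
```

The same source runs unchanged wherever an interpreter is available, which is the transferability advantage, while the extra translation layer is where the run-time cost comes from.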

Now let us return to Fortran. As seen above, it is a compiled language, making it pretty fast. It was designed for scientific number-crunching purposes (FORTRAN comes from FORmula TRANslation) and as such it is used in many numerical high-performance libraries (e.g. (Sca)LAPACK and BLAS). The fact that it appeared in 1957 does not mean nothing has happened since. Over the years the language evolved from a procedural language, in FORTRAN II, to one that also supports modern Object Oriented Programming (OOP) techniques, in Fortran 2003. It is true that new techniques (e.g. the OOP concept) were introduced later than was the case in other languages, and many existing scientific codes contain quite a bit of “old school” FORTRAN 77. This gives the impression that the language is rather limited compared to a modern language like C++.

So why is it still in use? Partly because, due to its age, many (numerical) libraries written in Fortran exist, and partly because of its performance in an HPC environment. This last point is also a cause of much debate between Fortran and C adepts. Which one is faster? This depends on many things. Over the years, compilers aiming at speed have been developed for both languages. In addition to the compiler, the programming skills of the scientist writing the code also matter. As a result, comparative tests end up showing very little difference in performance [link1, link2]. In the end, for scientific programming, I think the most important aspect to consider is the fact that most scientists are not as good at programming as they would like/think (author included), and as such, the difference between C(++) and Fortran speeds for new projects will mainly be due to this lack of skills.

However, if you have no previous programming experience, I think Fortran may be easier and safer to learn. You cannot play with pointers the way you can in C(++) and Pascal, which is a good thing, and you are required to declare your variables, another good coding practice. (Okay, in theory you can use implicit typing, but more or less everybody will advise against this, since it is bad coding practice.) It is also easier to write more or less bug-free code than it is in C(++) (remember defining a global constant PI and ending up with the integer value 3 instead of 3.1415…). Its long-standing procedural setup also keeps things a bit simpler, without the need to dive into the nitty-gritty details of OOP, where you should know that you are handling pointers (this may be news for people used to Java, and may explain some odd, unexpected behavior) and where you have to get to grips with concepts like inheritance and polymorphism, which, in my opinion, are rather complex in C++.
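The implicit typing mentioned above follows a simple default rule in Fortran: unless declared otherwise, names starting with the letters I through N are integers and all other names are reals. The following is a small Python model of that rule (not Fortran itself; the function names `implicit_type` and `assign` are hypothetical), showing how a constant can silently lose its decimals when its name happens to start with the wrong letter.

```python
def implicit_type(name):
    """Return the default Fortran type for an undeclared variable name.

    Models the default implicit typing rule: first letter I..N -> INTEGER,
    any other letter -> REAL.
    """
    first = name[0].upper()
    return "INTEGER" if "I" <= first <= "N" else "REAL"


def assign(name, value):
    """Mimic storing a value in an undeclared variable of that name.

    Assumption: conversion to INTEGER truncates toward zero, which is
    what Python's int() does for floats.
    """
    if implicit_type(name) == "INTEGER":
        return int(value)
    return float(value)


if __name__ == "__main__":
    # 'pi' keeps its decimals, while 'my_pi' and 'npi' are truncated to 3.
    for name in ("pi", "my_pi", "npi"):
        print(name, implicit_type(name), assign(name, 3.1415))
```

With `implicit none` at the top of a program unit, the compiler rejects such undeclared names instead of guessing a type, which is why it is so commonly recommended.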

In addition, Fortran allows you to grow while retaining ‘old’ code. You can start out with a simple procedural design (Fortran 95) and move toward Object Oriented Programming (Fortran 2003) easily. My own Fortran code is a mixture of Fortran 95 and Fortran 2003. (Note for those who think code written using OOP is much slower than procedural code: you should set the relevant compiler flags, like `-ipo`.)

In conclusion, we end up with a programming language which is fast, if not the fastest, and contains most modern features (like OOP). Compared to some more recent languages, it has a more limited user base, since it is not used that extensively for commercial purposes, leading to slower development of the compilers (though these are moving along nicely, and will probably still be moving along nicely when most of the new languages have been forgotten again). Tracking the popularity of programming languages is a nice pastime, which will generally show you C/C++ among the most popular languages, while languages like Pascal and Fortran hover somewhere around 20th-40th position, and remain there over long timescales.

The fact that Fortran is considered rather obscure by proponents of newer scripting languages like Python can lead to slightly funny comments like: “Why don’t you use Python instead of such an old and deprecated language? It is so much easier to use, and with the NumPy and SciPy libraries you can also do number-crunching.” First of all, Python is a scripting language (which in my mind unfortunately puts it at about the same level as HTML and CSS 🙂 ), but more interestingly, those libraries are merely wrappers around C wrappers around FORTRAN 77 libraries like MINPACK. So people suggesting the use of Python over Fortran 95/2003 code are actually suggesting using FORTRAN 77 over more recent Fortran standards. Such comments just put a smile on my face. 😀 With all this in mind, I hope to show in this blog that modern Fortran can tackle all challenges of modern scientific programming.