Once upon a time…

Once upon a time, a long time ago (21 days ago, to be precise), there was a conference in the tranquil town of Hasselt. Every year, for 23 years in a row, researchers gathered there for three full days to present and adore their largest and most colorful diamonds. For three full days, there was just that little bit more sparkle in their eyes. They divulged where new diamonds could be found, and how they could be used. For three days they could speak without any restriction, without hesitation, about the sixth element which bonds them all, because all knew the language. They honored the magic of the NV-center and the arcane incantations leading to the highest doping. All of them masters of their common mystic craft.

At the end of the third day, with sadness in their hearts, they said their good-byes and went back, in small groups, to their own ivory towers, far, far away. With them, however, they took a small sparkle of hope and expectation, because in twelve full moons they would reconvene, bringing with them new and grander tales and even more sparkling diamonds than had ever been seen before.

For most outsiders, the average conference presentation is as clear as an arcane conjuration of a mythological beast. As scientists, we are often trapped by the assumption that our unique expertise is common knowledge for our audience, a side effect of our enthusiasm for our own work.

Clear vs. accurate

In a world where science is under constant pressure from the financing model employed, and where “fake news” and “alternative facts” are on the rise, it is important for young researchers to be able to tell their story clearly and accurately.

However, clear and accurate have the bad habit of counteracting one another, and as such, maintaining a good balance between the two takes a lot more effort than one might expect. Focusing on only one aspect (accuracy or clarity) tends to be disastrous. Conference presentations and scientific publications tend to focus on accuracy, making them not clear at all for the non-initiate. Public presentations and newspaper articles, on the other hand, focus mainly on clarity, with fake news accidents waiting to happen. For example, one could recently read that 7% of the DNA of the astronaut Scott Kelly had changed during a space flight, instead of a change in gene expression. Although both things may look similar, they are very different. The latter presents a rather natural response of the (human) body to any stress situation. The former, however, removes Scott from the human race entirely. Even the average gorilla would be more closely related to you and me than Scott Kelly, as gorilla DNA differs less than 5% from ours. So keeping a good balance between clarity and accuracy is important, albeit not that easy. Time pressure plays an important role here.

Two extremes?

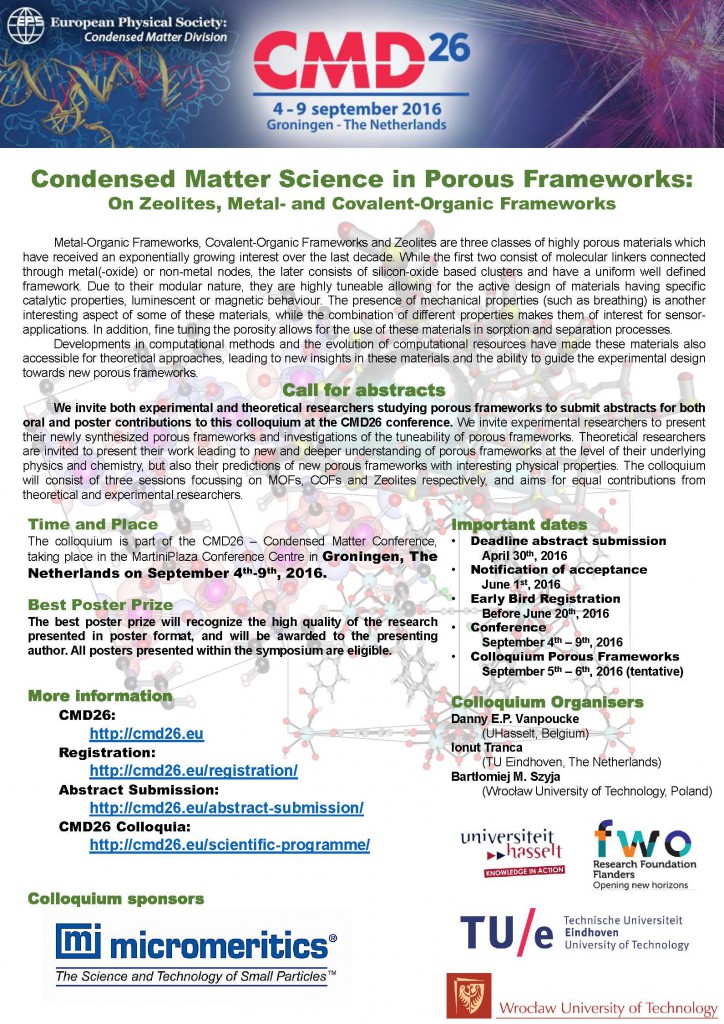

Wetenschapsbattle Trophy: Each of the contestants of the wetenschapsbattle received a specially designed and created hat from the children of the school judging the contest. Mine has diamonds and computers. 🙂

In the week following the diamond conference in Hasselt, I also participated in a science battle: a contest in which researchers have to explain their research to an audience of 6- to 12-year-olds in a time span of 15 minutes. These kids are judge, jury and executioner of the contest, so to speak. It’s a natural reflex to place these two events at opposite ends of a scale. And that is certainly true for some aspects: the entire room volunteering spontaneously when asked for help is something which happens somewhat less often at a scientific conference. However, clarity and accuracy should be equally central aspects of both.

So, how do you explain your complex research story to a crowd of 6- to 12-year-olds? I discovered the answer during a masterclass by The Floor is Yours: more or less the same way you should tell it to an audience of adults, or even to your own colleagues. As a researcher you are a specialist in a very narrow field, which means that no one will lose out when the focus is shifted a bit more toward clarity. The main problem you encounter here, however, is time. This is both the time required to tell your story (forget “elevator pitches”; those are good if you are a used-car salesman, they are not for science) and the time required to prepare your story (it took me a few weeks to build and then polish my story for the children).

Most of this time is spent answering the questions “What am I actually doing?” and “Why am I doing this specifically?”. The quest for metaphors which are both clear and accurate takes quite some time. During this task you tend to suffer, as a scientist, from the combination of your need for accuracy and your deep background knowledge. These are the same obstacles a scientist encounters when involved in a public discussion on his or her own field of expertise.

Of course you also do not want to be pedantic:

Q: What do you do?

A: I am a Computational Materials Researcher.

Q: Compu-what??

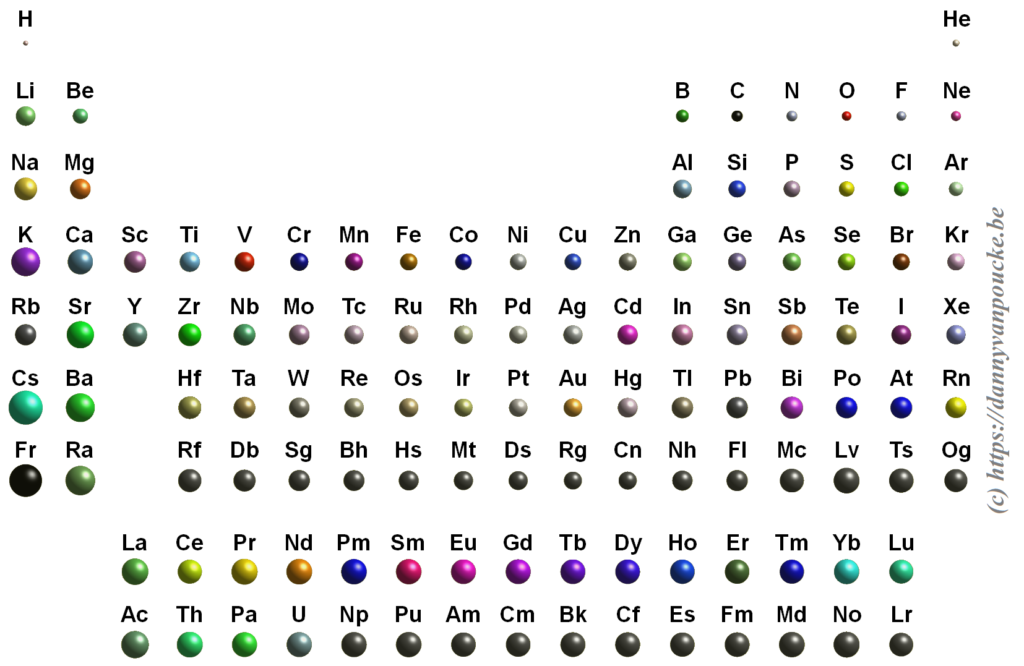

A: 1) Computational = using a computer

2) Materials = everything you see around you, the stuff everything is made of

3) Researcher = Me

However, as a scientist, you may want to use such imaginary discussions during your preparation. Starting from these pedantic dialogues, you trace a path along the answers which interest you most: the topics which touch your scientific personality. This way, you take a step back from your direct research and get a broader picture. Also, by talking about themes, you present your research from a broader perspective, which is more easily accessible to your audience: “What are atoms?”, “How do you make diamond?”, “What is a computer simulation?”

In the end, after much blood, sweat and tears, your story tells something about your world as a whole. Depending on your audience you can include more or less detailed aspects of your actual day-to-day research, but at its heart, it remains a story.

Because, whether we like it or not, in essence we are all “Pan narrans”, storytelling apes.

